Your LLM is frozen in time: it only knows what was in its training data. There are several ways to deal with this problem, and LangChain tools are one of them. When your LLM can’t access real-time data, LangChain tools come to the rescue. When your LLM doesn’t have access to a company’s data, once again, LangChain tools come to the rescue.

In this blog, we will learn how to use LangChain tools. We will work through five different tools and use them in several ways, both with and without agents.

Why Do We Need LangChain Tools?

Language models are excellent at natural language processing, but they can’t inherently perform tasks like:

- Accessing real-time data (e.g., stock prices, news)

- Performing advanced calculations

- Retrieving factual information (e.g., from Wikipedia)

LangChain tools let language models interact with external sources: calling APIs, solving math problems, or fetching up-to-date financial data. These tools expand what your model can do beyond text generation.
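Under the hood, a tool is just a function with a name and a description that the model can read. The minimal, framework-free sketch below shows that idea. SimpleTool, dispatch, and the two example tools are hypothetical illustrations and not LangChain’s API, although LangChain’s own tools also carry a name and a description.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Callable, Dict


@dataclass
class SimpleTool:
    """Hypothetical stand-in for a tool: a name, a description, and a function."""
    name: str
    description: str  # the text a model reads when deciding which tool to call
    func: Callable[[str], str]


def today_utc(_: str) -> str:
    # Something a frozen model cannot know by itself: today's date.
    return datetime.now(timezone.utc).date().isoformat()


def word_count(text: str) -> str:
    # In this sketch every tool takes and returns a string.
    return str(len(text.split()))


TOOLS: Dict[str, SimpleTool] = {
    tool.name: tool
    for tool in [
        SimpleTool("today", "Returns today's date in UTC as YYYY-MM-DD.", today_utc),
        SimpleTool("word_count", "Counts the words in the input text.", word_count),
    ]
}


def dispatch(tool_name: str, tool_input: str) -> str:
    """Run the tool a model asked for by name, or report an unknown name."""
    tool = TOOLS.get(tool_name)
    if tool is None:
        return f"Error: unknown tool '{tool_name}'"
    return tool.func(tool_input)


if __name__ == "__main__":
    print(dispatch("today", ""))
    print(dispatch("word_count", "LangChain tools extend what a model can do"))  # prints 8
```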

Let’s Move to Code

Let’s start by installing a few dependencies:

pip install langchain_fireworks langchain

pip install -U langchain-community

pip install wikipedia

pip install numexpr

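Next, we need an LLM. Here we will use Mistral’s Mixtral 8x7B Instruct model, served through Fireworks AI. You will need a Fireworks API key, which you can get from the Fireworks AI website.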

import getpass, os

# Prompt for the Fireworks API key without echoing it to the screen

os.environ["FIREWORKS_API_KEY"] = getpass.getpass()

from langchain_fireworks import ChatFireworks

llm = ChatFireworks(model="accounts/fireworks/models/mixtral-8x7b-instruct")

Exploring LangChain Tools with Agents

We can use LangChain tools with or without an agent. When we use an agent, we use a LangChain Agent. There are many agent types to choose from, and here we will use the zero-shot ReAct description agent (ZERO_SHOT_REACT_DESCRIPTION). I chose it somewhat arbitrarily, so feel free to try others. You can also read my article on LangChain Agents to learn more.
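The zero-shot ReAct agent works in a loop. The model writes a Thought and picks an Action and an Action Input. The chosen tool returns an Observation, and this repeats until the model emits a Final Answer. You will see these same labels in the verbose output later in this post. Below is an offline sketch of that loop that uses a scripted stand-in for the LLM. run_react, make_scripted_llm, multiply, and the regular expression are hypothetical simplifications, not LangChain’s implementation.

```python
import re
from typing import Callable, Dict, List

# Matches "Action: <tool>" followed by "Action Input: <input>" on the next line.
ACTION_RE = re.compile(r"Action:\s*(.+?)\s*\nAction Input:\s*(.+)", re.DOTALL)


def make_scripted_llm(replies: List[str]) -> Callable[[str], str]:
    """Hypothetical offline 'LLM' that returns canned replies in order."""
    remaining = iter(replies)
    return lambda prompt: next(remaining)


def run_react(question: str, llm: Callable[[str], str],
              tools: Dict[str, Callable[[str], str]], max_steps: int = 5) -> str:
    """Alternate model steps and tool calls until a Final Answer appears."""
    transcript = f"Question: {question}\nThought:"
    for _ in range(max_steps):
        reply = llm(transcript)
        transcript += reply + "\n"
        if "Final Answer:" in reply:
            return reply.split("Final Answer:", 1)[1].strip()
        match = ACTION_RE.search(reply)
        if match is None:
            observation = "Could not find an Action in the reply."
        else:
            name, tool_input = match.group(1).strip(), match.group(2).strip()
            tool = tools.get(name)
            observation = tool(tool_input) if tool else f"Unknown tool: {name}"
        # Append the tool result so the model can reason over it on the next step.
        transcript += f"Observation: {observation}\nThought:"
    return "Agent stopped: step limit reached."


def multiply(expression: str) -> str:
    """Toy calculator that only handles 'a * b' with integers."""
    left, right = expression.split("*")
    return str(int(left) * int(right))


if __name__ == "__main__":
    llm = make_scripted_llm([
        " I can use the calculator.\nAction: Calculator\nAction Input: 50 * 25",
        " I now know the final answer.\nFinal Answer: 1250",
    ])
    print(run_react("what is 50 times 25?", llm, {"Calculator": multiply}))  # prints 1250
```

With a real agent, the scripted replies would come from the LLM, and the framework would supply a more robust output parser and the step limit.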

Now let’s import the packages needed to run an agent:

from langchain.agents import AgentType, initialize_agent

from langchain_community.agent_toolkits.load_tools import load_tools

Now, let’s install and import our first tool, Yahoo Finance, which fetches financial news from Yahoo.

Tool 1: Yahoo Finance Tool For Finance News

%pip install --upgrade --quiet yfinance

from langchain_community.tools.yahoo_finance_news import YahooFinanceNewsTool

After installing and importing the tool, we need to use it with an agent. Whenever we use a tool with an agent, we first create a list of the tools we want to pass. Then, we initialize the agent using the initialize_agent function, where we specify the agent type (in this case, ZERO_SHOT_REACT_DESCRIPTION) and the LLM we are using. This setup allows the agent to utilize the specified tools to handle various queries.
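"Zero-shot" means the agent gets no worked examples of when to use each tool. It chooses based only on the tool names and descriptions written into its prompt. The sketch below assembles a prompt of that shape. Its wording only approximates LangChain’s zero-shot ReAct template and is not copied from it. render_tool_prompt and the example tool names are hypothetical.

```python
from typing import List, Tuple


def render_tool_prompt(tools: List[Tuple[str, str]], question: str) -> str:
    """Build a simplified zero-shot ReAct prompt listing each tool's name and description."""
    tool_lines = "\n".join(f"{name}: {description}" for name, description in tools)
    tool_names = ", ".join(name for name, _ in tools)
    return (
        "Answer the following question as best you can. "
        "You have access to the following tools:\n\n"
        f"{tool_lines}\n\n"
        "Use the following format:\n"
        "Thought: think about what to do\n"
        f"Action: the action to take, should be one of [{tool_names}]\n"
        "Action Input: the input to the action\n"
        "Observation: the result of the action\n"
        "... (Thought/Action/Action Input/Observation can repeat)\n"
        "Final Answer: the final answer to the original question\n\n"
        f"Question: {question}\n"
        "Thought:"
    )


if __name__ == "__main__":
    # Hypothetical tool names and descriptions, for illustration only.
    print(render_tool_prompt(
        [("finance_news", "Useful for questions about recent company news."),
         ("calculator", "Useful for arithmetic.")],
        "What happened today with Microsoft stocks?",
    ))
```

This is why a clear, specific tool description matters. It is the only information the agent has when it decides which tool fits a query.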

tools = [YahooFinanceNewsTool()]

agent_chain = initialize_agent(tools, llm, agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION, verbose=True)

This tool fetches real-time financial news from Yahoo Finance, which makes it particularly useful for applications that deal with the stock market.

When you ask the agent about a stock (e.g., “What happened today with Microsoft stocks?”), it retrieves the latest news about that company.

Let’s invoke the agent to see the output ourselves:

agent_chain.invoke("bring latest news of microsoft?")

Tool 2: LLM-Math for Complex Calculations

LLM-Math lets the agent hand calculations to a calculator tool, so it can handle more complex math than the language model could reliably do on its own.

tools_llm_math = load_tools(["llm-math"], llm=llm)

agent_llm_math = initialize_agent(tools_llm_math, llm, agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION, verbose=True)

result_llm_math = agent_llm_math.invoke("what is 50 times 25?")

print("LLM-Math Tool Result:", result_llm_math)

Output:

> Entering new AgentExecutor chain...

The user is asking me to multiply 50 by 25. I can use the calculator tool to perform this operation.

Action: Calculator

Action Input: 50 * 25

Observation: Answer: 1250

Thought:I now know the final answer.

Final Answer: The answer to 50 times 2

> Finished chain.

LLM-Math Tool Result: {'input': 'what is 50 times 25?', 'output': 'The answer to 50 times 2'}

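load_tools(["llm-math"], llm=llm) loads the LLM-Math tool, which lets the agent solve math problems. The agent can now answer the math query (“What is 50 times 25?”) by calling this tool instead of relying on the language model’s internal approximation of math. The Observation line above shows the tool returning the correct result, 1250. Note that in this run the model’s final answer text came back cut off, even though the tool’s result was correct.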
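The point of LLM-Math is that code does the arithmetic, not the model. To my knowledge, LangChain’s LLM-Math chain has the model translate the question into an expression and then evaluates that expression with the numexpr library. The sketch below shows only the evaluation half, using just the standard library. safe_eval is a hypothetical helper that walks Python’s ast and allows only basic arithmetic operators. An expression like the 50 * 25 from the run above is therefore computed exactly rather than guessed, and names, calls, and attribute access are rejected.

```python
import ast
import operator
from typing import Union

# Whitelisted operators: anything else (names, calls, attributes) is rejected.
_BIN_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.Pow: operator.pow,
}
_UNARY_OPS = {ast.UAdd: operator.pos, ast.USub: operator.neg}

Number = Union[int, float]


def safe_eval(expression: str) -> Number:
    """Evaluate a plain arithmetic expression such as '50 * 25'."""

    def _eval(node: ast.AST) -> Number:
        if isinstance(node, ast.Expression):
            return _eval(node.body)
        # type() check (not isinstance) so booleans are not treated as numbers
        if isinstance(node, ast.Constant) and type(node.value) in (int, float):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _BIN_OPS:
            return _BIN_OPS[type(node.op)](_eval(node.left), _eval(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _UNARY_OPS:
            return _UNARY_OPS[type(node.op)](_eval(node.operand))
        raise ValueError(f"Unsupported expression: {expression!r}")

    return _eval(ast.parse(expression, mode="eval"))


if __name__ == "__main__":
    print(safe_eval("50 * 25"))  # prints 1250
```

A production version would also cap exponent sizes, since an input like 9 ** 9 ** 9 is valid arithmetic but very expensive to compute.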

Tool 3: The Wikipedia Tool

This tool lets the LLM fetch information from Wikipedia and explain what it finds in response to your query.

tools_wikipedia = load_tools(["wikipedia"], llm=llm)

agent_wikipedia = initialize_agent(tools_wikipedia, llm, agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION, verbose=True)

result_wikipedia = agent_wikipedia.invoke(" cricket worldcup 2023?")

print("Wikipedia Tool Result:", result_wikipedia)

Running LangChain Tools Without Agents

We can also run tools directly. Let’s run the Wikipedia tool without an agent. Note that each tool is called a little differently when we aren’t using an agent.

Although tools are typically designed to be used by agents, they can also run independently. Tools are just functions or APIs that perform specific tasks, so you can call them directly without an agent.

However, without an agent, you’ll need to manually manage the flow of information between the tools and the LLM.
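In practice, "managing the flow yourself" means your own code picks the tool, calls it, and hands the result to the LLM. Here is a minimal offline sketch of that two-step flow. fake_wikipedia, build_prompt, answer_without_agent, and the echo model are hypothetical stand-ins. With the objects from this post, tool could plausibly be wikipedia.run, and llm could be a small function that returns llm.invoke(prompt).content. That wiring is an assumption, not something this post tested.

```python
from typing import Callable, List


def fake_wikipedia(query: str) -> str:
    """Hypothetical stand-in for a real search tool such as wikipedia.run."""
    return f"Page: {query}\nSummary: (summary text would appear here)"


def build_prompt(question: str, context: str) -> str:
    """Without an agent, we write the prompt that carries the tool output."""
    return (
        "Use only the context below to answer the question.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


def answer_without_agent(question: str, search_term: str,
                         tool: Callable[[str], str],
                         llm: Callable[[str], str]) -> str:
    # Step 1: our code, not an agent, decides which tool to call and with what input.
    context = tool(search_term)
    # Step 2: our code passes the tool output to the model inside a prompt.
    return llm(build_prompt(question, context))


if __name__ == "__main__":
    prompts: List[str] = []

    def echo_llm(prompt: str) -> str:
        # Stand-in model that records the prompt and returns a fixed reply.
        prompts.append(prompt)
        return "One Piece is a Japanese manga series."

    print(answer_without_agent("What is One Piece?", "One Piece", fake_wikipedia, echo_llm))
    print(prompts[0])
```

The trade-off is control versus flexibility. You decide exactly which tool runs, but you also lose the agent’s ability to pick a different tool or to make several calls.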

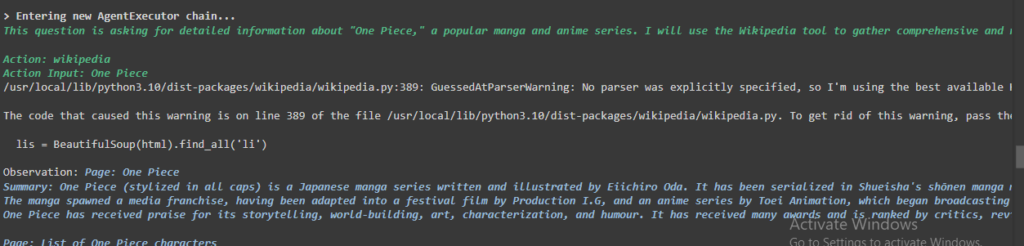

Let’s start with the Wikipedia tool:

from langchain_community.tools import WikipediaQueryRun

from langchain_community.utilities import WikipediaAPIWrapper

# Initialize the query tool with the Wikipedia API wrapper

wikipedia = WikipediaQueryRun(api_wrapper=WikipediaAPIWrapper())

Now let’s run the query, just as we would invoke any tool:

wikipedia.run("One Piece")

Output:

Page: One Piece\nSummary: One Piece (stylized in all caps) is a Japanese manga series written and illustrated by Eiichiro Oda. It has been serialized in Shueisha\'s shōnen manga magazine Weekly Shōnen Jump since July 1997, with its chapters compiled in 109 tankōbon volumes as of July 2024. The story follows the adventures of Monkey D. Luffy and his crew, the Straw Hat Pirates, where he .....

Here we initialize and call the WikipediaQueryRun tool directly, bypassing any agent or intermediate decision-making system. We manage the tool call ourselves: we import the necessary utilities (WikipediaQueryRun and WikipediaAPIWrapper), construct the tool explicitly, and run the query.
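Because we call the tool directly, its result is a single string. Judging by the output above, each hit appears to be a "Page:" line followed by a "Summary:" line, and I assume multiple hits are separated by blank lines. If you want structured data instead, a small parser such as the hypothetical parse_wikipedia_output below can split that string. The format is inferred from the output, so check it against your installed version.

```python
import re
from typing import Dict, List


def parse_wikipedia_output(text: str) -> List[Dict[str, str]]:
    """Split 'Page: ...' / 'Summary: ...' blocks into title/summary dicts."""
    results = []
    # Assumed format: hits separated by a blank line, each starting with "Page: ".
    for block in re.split(r"\n\n(?=Page: )", text.strip()):
        if not block.startswith("Page: ") or "\nSummary: " not in block:
            continue  # skip anything that does not match the assumed format
        title, summary = block[len("Page: "):].split("\nSummary: ", 1)
        results.append({"title": title.strip(), "summary": summary.strip()})
    return results


if __name__ == "__main__":
    sample = (
        "Page: One Piece\nSummary: A Japanese manga series by Eiichiro Oda.\n\n"
        "Page: Eiichiro Oda\nSummary: A Japanese manga artist."
    )
    for hit in parse_wikipedia_output(sample):
        print(hit["title"], "->", hit["summary"])
```

The split uses a lookahead on "Page: " so that blank lines inside a summary do not start a new hit.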

Let’s run a DuckDuckGo search without an agent too!

%pip install -qU duckduckgo-search

from langchain_community.tools import DuckDuckGoSearchRun

search = DuckDuckGoSearchRun()

search.invoke("who is kovu brandon?")

Now let’s try Tavily, another search engine available among LangChain tools. Unlike DuckDuckGo, Tavily requires an API key, which you can get from the Tavily website.

Tavily is a search engine specifically designed for artificial intelligence (AI) models. It’s like a super-fast library for AI, helping them find information quickly and accurately.

%pip install -U tavily-python

Setting up Tavily’s environment:

import getpass

import os

os.environ["TAVILY_API_KEY"] = getpass.getpass()

After setting up the environment, we can use Tavily with the code below. The k parameter sets how many results we want back.

from langchain_community.retrievers import TavilySearchAPIRetriever

retriever = TavilySearchAPIRetriever(k=3)

retriever.invoke("who won gold medal in javelin throw olympics 2024?")

Output:

[Document(metadata={'title': 'Paris Olympics: Arshad Nadeem wins javelin gold, Neeraj ...', 'source': 'https://www.aljazeera.com/sports/liveblog/2024/8/8/live-paris-olympics-indias-neeraj-pakistans-arshad-in-javelin-final', 'score': 0.99871576, 'images': []}, page_content="Pakistan's Arshad Nadeem wins his country's first Olympic medal in 32 years as he claims gold in the men's javelin final at Paris 2024. India's\xa0..."),.....

Running Multiple LangChain Tools with an Agent

Often we want to use multiple tools together, and LangChain agents make this possible. Based on the user’s query, the agent decides which of the available tools to use.

Here we will initialize two LangChain tools separately and then combine them to see the agent in action.

from langchain_community.tools import TavilySearchResults

tools_wikipedia = load_tools(["wikipedia"], llm=llm)

This prepares the Wikipedia tool for the agent. Once Tavily is initialized, we will concatenate the two so that both tools can be passed to the agent together as one list. Now let’s initialize Tavily:

tool_tavily = TavilySearchResults(max_results=5, search_depth="advanced", include_answer=True, include_raw_content=True, include_images=True)

Now we combine tools_wikipedia, which holds the Wikipedia tool loaded with our LLM, with tool_tavily, which holds the Tavily search tool:

tools = tools_wikipedia + [tool_tavily]

Now we pass these two tools to the agent when we initialize it. To avoid errors when the LLM’s output doesn’t match the format the agent expects, also set handle_parsing_errors=True:

agent = initialize_agent(tools, llm, agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION, verbose=True, handle_parsing_errors=True)
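handle_parsing_errors=True matters because a ReAct agent must turn free-form model text into either a tool call or a final answer. Models sometimes reply in plain prose that fits neither format. The simplified sketch below covers both cases and adds a recovery path: instead of raising, it turns the error into text that could be fed back to the model. As I understand it, this is roughly what LangChain does when the flag is set. ToolCall, Finish, parse_react_reply, and parse_or_recover are hypothetical names, not LangChain’s classes.

```python
import re
from dataclasses import dataclass
from typing import Union


@dataclass
class ToolCall:
    tool: str
    tool_input: str


@dataclass
class Finish:
    answer: str


class OutputParseError(ValueError):
    """Raised when a reply matches neither the action format nor a final answer."""


_ACTION = re.compile(r"Action\s*:\s*(.*?)\s*\n\s*Action\s*Input\s*:\s*(.*)", re.DOTALL)


def parse_react_reply(reply: str) -> Union[ToolCall, Finish]:
    """Simplified ReAct parser: a Final Answer wins, otherwise look for an action."""
    if "Final Answer:" in reply:
        return Finish(reply.split("Final Answer:", 1)[1].strip())
    match = _ACTION.search(reply)
    if match:
        # Models often wrap the input in quotes, so strip them.
        return ToolCall(match.group(1).strip(), match.group(2).strip().strip('"'))
    raise OutputParseError(f"Could not parse LLM output: {reply!r}")


def parse_or_recover(reply: str) -> Union[ToolCall, Finish, str]:
    """In the spirit of handle_parsing_errors=True: turn a parse failure into
    a message the model can see on the next step instead of crashing the run."""
    try:
        return parse_react_reply(reply)
    except OutputParseError as err:
        return f"Invalid format ({err}). Reply with Action/Action Input or Final Answer."


if __name__ == "__main__":
    print(parse_or_recover("Thought: look it up\nAction: wikipedia\nAction Input: One Piece"))
    print(parse_or_recover("I am a large language model trained to help."))
```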

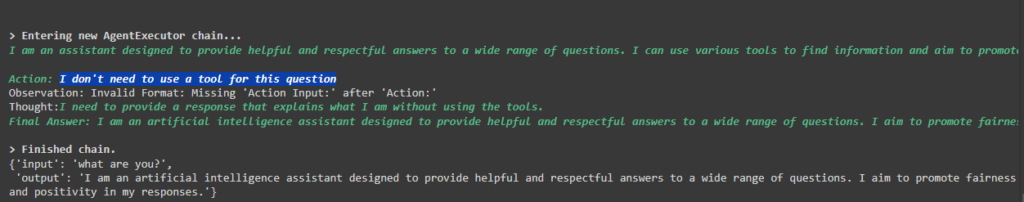

Let’s try invoking our agent with multiple tools. First, I ask something the LLM can answer by itself, without using any tool.

It should tell you that it doesn’t need a tool to answer that:

agent.invoke("what are you?")

Output:

Next, we ask something that does require a tool. Because the model is frozen in time, many kinds of detailed knowledge need a tool. So let’s ask for something detailed that can be found on Wikipedia and have the agent use that tool.

agent.invoke("tell me everything about one piece")

Output:

Conclusion:

In conclusion, LangChain tools provide a powerful way to overcome the limitations of language models. They allow models to access real-time data, perform complex calculations, and retrieve factual information from external sources like APIs and databases.

Throughout this blog, we explored several practical tools, such as Yahoo Finance, LLM-Math, Wikipedia, DuckDuckGo, and Tavily, and saw how they can be used both with and without agents.

For any suggestions, feel free to reach out.