Are you wondering what LlamaIndex is and how it fits into the landscape of large language models (LLMs)? LlamaIndex, previously known as GPT Index, is a flexible framework designed to make it easier for LLMs to work with your private data. LLMs like GPT-4 are pre-trained on vast amounts of public data, but they don’t automatically know anything about your specific use case or your private data. LlamaIndex steps in to bridge that gap.

This blog is your first step into LlamaIndex, so read it through to the end. I am sure you will enjoy this short 3-minute journey of learning what LlamaIndex is.

This blog will teach you:

- What is LlamaIndex

- How LlamaIndex works

- LangChain Vs LlamaIndex

- LlamaIndex use cases

- First code of LlamaIndex

What is LlamaIndex

So, what is LlamaIndex? It is a framework that makes it easier to develop apps based on large language models (LLMs). It acts as a bridge: it not only lets you use LLMs but also connects them to external tools, APIs, and documents, giving the LLM access to information it was never trained on. This helps you build smarter LLM-powered apps by feeding them new, up-to-date data.

You’ve probably used ChatGPT, right? It’s one of the most popular apps powered by a large language model (LLM) trained on vast amounts of data to have human-like conversations. However, the data used to train the LLM is only current up to a cutoff date, such as 2023 or mid-2024. This means the model doesn’t have access to the latest news or real-time information. Even if the model can perform web searches, it still can’t access private data, such as specific PDF files, real-time sensor data, or other sources you might need.

This is where LlamaIndex comes to the rescue. It helps connect LLMs, like the one powering ChatGPT and similar apps, to external tools and data. Now that we’ve explored the answer to ‘What is LlamaIndex,’ let’s see how it works to gain a deeper understanding.

How Does LlamaIndex Work

LlamaIndex has the word ‘Index’ in its name because it specializes in indexing, which is why it is considered fast at retrieval. Here is how it ingests your data.

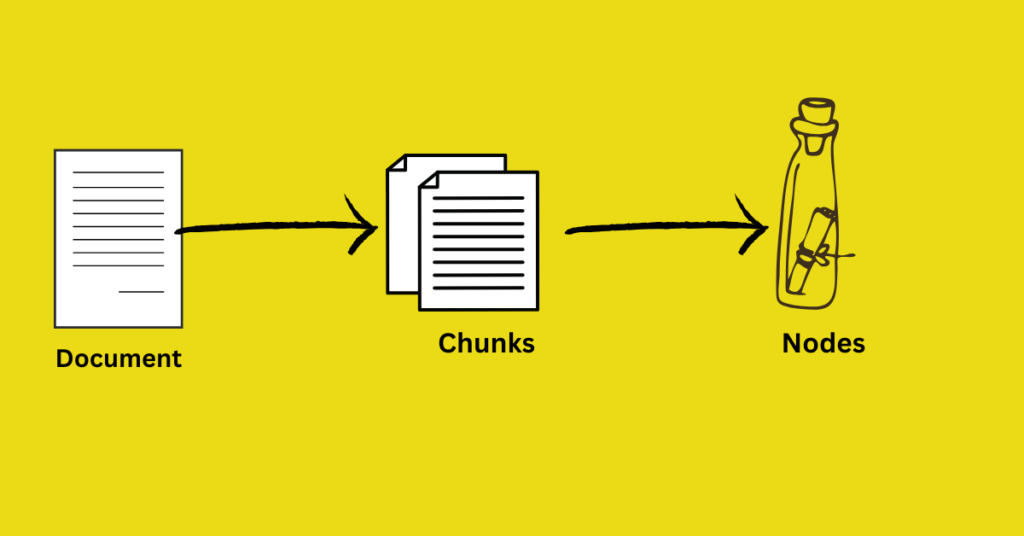

Suppose you provide your data to LlamaIndex in any format, such as a PDF, a text document, or a database. LlamaIndex processes your data, breaking it down into smaller chunks called nodes.

Each chunk is stored as a text node, a simple container that holds the text and little else, which keeps things uncomplicated and well organized. When the nodes are created, LlamaIndex also records metadata and each node’s relationships with other nodes.
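To make the chunking step concrete, here is a minimal sketch in plain Python. It is not LlamaIndex’s own implementation: the `ToyNode` class, the `split_into_nodes` function, the word-based `chunk_size`, and the `intro.txt` source name are all hypothetical names chosen for illustration. It only shows the idea of splitting text into nodes that carry metadata and links to their neighbours.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ToyNode:
    """Hypothetical stand-in for a text node: text, metadata, and neighbour links."""
    node_id: str
    text: str
    metadata: dict = field(default_factory=dict)
    prev_id: Optional[str] = None  # relationship to the previous chunk
    next_id: Optional[str] = None  # relationship to the next chunk


def split_into_nodes(text: str, source: str, chunk_size: int = 8) -> List[ToyNode]:
    """Split text into chunks of at most `chunk_size` words and link neighbours."""
    words = text.split()
    nodes: List[ToyNode] = []
    for start in range(0, len(words), chunk_size):
        chunk = " ".join(words[start:start + chunk_size])
        nodes.append(
            ToyNode(node_id=f"{source}-{len(nodes)}", text=chunk,
                    metadata={"source": source})
        )
    # Record previous/next relationships between adjacent nodes.
    for left, right in zip(nodes, nodes[1:]):
        left.next_id = right.node_id
        right.prev_id = left.node_id
    return nodes


# Made-up sample text, used only to exercise the sketch.
document = ("LlamaIndex breaks documents into smaller chunks called nodes. "
            "Each node stores text and metadata. "
            "Nodes also remember their neighbours.")
nodes = split_into_nodes(document, source="intro.txt")
for node in nodes:
    print(node.node_id, "|", node.text, "| prev:", node.prev_id, "| next:", node.next_id)
```

A real pipeline would typically split on sentences or tokens rather than a fixed word count, but the shape of the result is the same: a sequence of small text containers that know where they came from and which chunks sit next to them.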

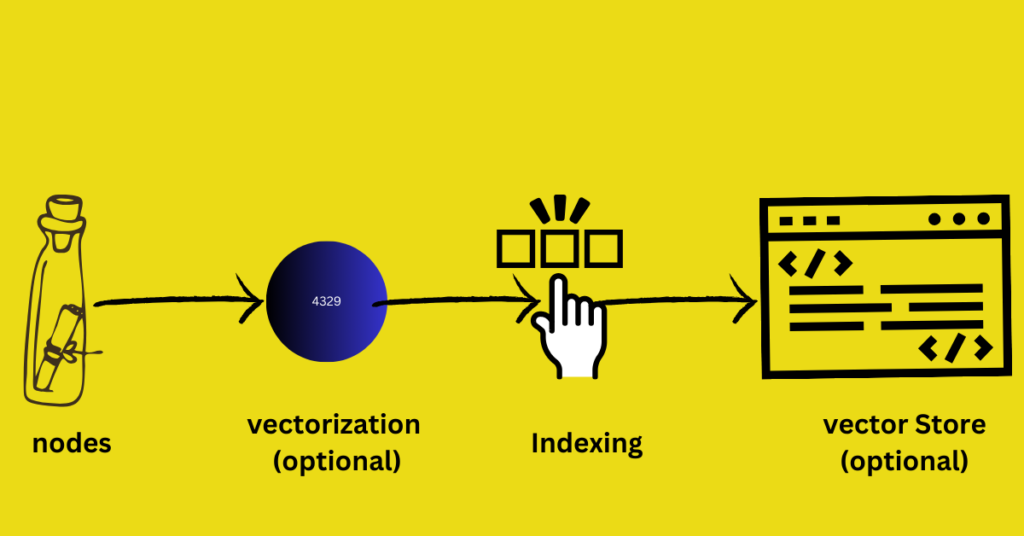

These nodes are then indexed using different methods, such as:

- Tree indexing: This creates a hierarchical structure of nodes, making it efficient for searching through large datasets.

- Vector indexing: This converts nodes into numerical representations (vectors) that capture their semantic meaning.

- Keyword table indexing: This extracts keywords from nodes and creates a mapping for efficient retrieval.

- List indexing: This maintains a simple list of information and is suitable for straightforward retrieval tasks.
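The difference between two of these strategies can be sketched in a few lines of plain Python. This is an illustration under stated assumptions, not how LlamaIndex implements its indexes: the node texts are made up, the helper names (`tokenize`, `keyword_lookup`, `vector_search`) are hypothetical, and simple word counts stand in for the learned embeddings a real vector index would use.

```python
import math
import re
from collections import Counter, defaultdict

# Toy nodes with made-up text; in a real pipeline these come from the chunking step.
nodes = {
    "n0": "LlamaIndex splits documents into nodes",
    "n1": "Vector indexes compare embeddings of nodes",
    "n2": "Keyword tables map keywords to nodes",
}


def tokenize(text):
    """Lowercase the text and return its alphabetic words."""
    return re.findall(r"[a-z]+", text.lower())


# Keyword table index: keyword -> set of ids of the nodes that contain it.
keyword_table = defaultdict(set)
for node_id, text in nodes.items():
    for word in tokenize(text):
        keyword_table[word].add(node_id)


def keyword_lookup(query):
    """Return the ids of nodes sharing at least one exact keyword with the query."""
    hits = set()
    for word in tokenize(query):
        hits |= keyword_table.get(word, set())
    return sorted(hits)


# Vector index: word-count vectors are an assumption standing in for embeddings.
vectors = {node_id: Counter(tokenize(text)) for node_id, text in nodes.items()}


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def vector_search(query, top_k=1):
    """Return the ids of the top_k nodes most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(nodes, key=lambda nid: cosine(q, vectors[nid]), reverse=True)
    return ranked[:top_k]


print(keyword_lookup("keyword tables"))
print(vector_search("compare embeddings"))
```

The keyword table answers "which nodes mention this word?" with an exact lookup, while the vector search ranks every node by similarity and keeps the best matches. With real embeddings, that ranking can match on meaning even when no words overlap, which word counts cannot do.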

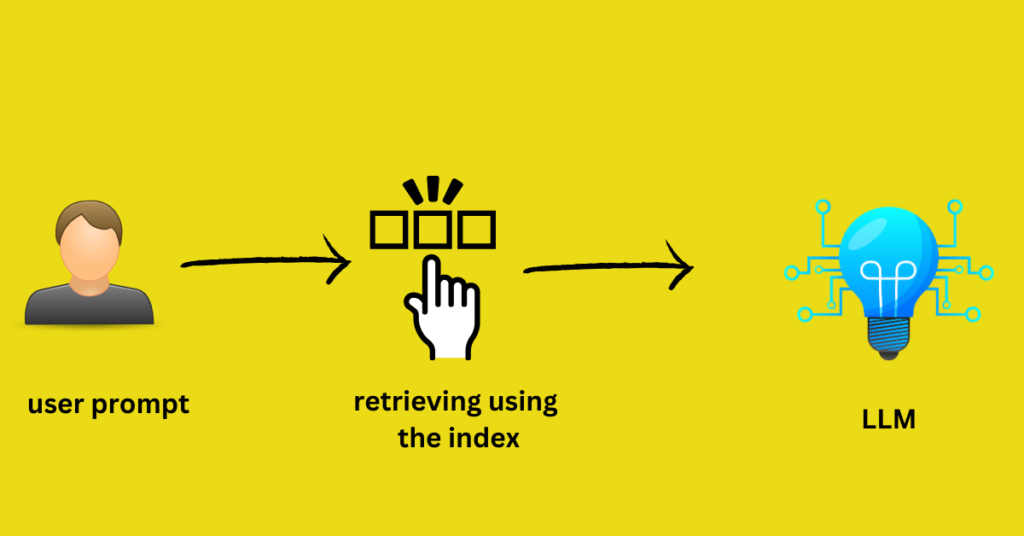

Finally, when a user asks a query, LlamaIndex searches through the indexed data using the method appropriate to the query type. It then passes the retrieved information to the LLM, which generates a comprehensive and informative response.

Now that you have a better idea of what LlamaIndex is, let’s go deeper by understanding the difference between LlamaIndex and LangChain.
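The query flow described above (retrieve first, then hand the results to the LLM) can be sketched as follows. Everything here is an assumption made for illustration: the sample chunks are invented, retrieval is a naive word-overlap score, and `fake_llm` is a placeholder that does not call any model.

```python
def retrieve(query, chunks, top_k=2):
    """Rank chunks by how many query words they share and keep the best top_k."""
    query_words = set(query.lower().split())
    scored = [(len(query_words & set(chunk.lower().split())), chunk) for chunk in chunks]
    scored = [item for item in scored if item[0] > 0]  # drop chunks with no overlap
    scored.sort(key=lambda item: item[0], reverse=True)
    return [chunk for _, chunk in scored[:top_k]]


def build_prompt(query, context_chunks):
    """Place the retrieved chunks in front of the question."""
    context = "\n".join(f"- {chunk}" for chunk in context_chunks)
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}\nAnswer:"
    )


def fake_llm(prompt):
    """Placeholder for a real LLM call; it only reports the prompt size."""
    return f"[LLM would answer here, given a prompt of {len(prompt)} characters]"


# Invented sample data standing in for indexed private documents.
chunks = [
    "The warranty for the X100 lasts two years.",
    "The X100 ships with a USB-C cable.",
    "Our office is closed on public holidays.",
]
query = "How long is the X100 warranty"
context = retrieve(query, chunks)
prompt = build_prompt(query, context)
print(prompt)
print(fake_llm(prompt))
```

The point of the sketch is the order of operations: the LLM never sees the whole dataset, only the few chunks that retrieval judged relevant, which is how private data reaches a model that was never trained on it.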

LangChain Vs LlamaIndex

Like LlamaIndex, there is another framework, known as ‘LangChain’, that connects LLMs with different components to make app development easier. To get a deeper idea of LlamaIndex, we need a sneak peek at LangChain and how it differs from LlamaIndex.

The purpose of both LlamaIndex and LangChain is the same; only the approaches are different. The key difference is that LlamaIndex specializes in optimizing data organization and retrieval for LLMs. LangChain, on the other hand, is a versatile framework that excels at chaining multiple components and external tools to build advanced workflows.

The table below summarizes the differences. If you want to know more about LangChain, you can read my blogs ‘What is LangChain’ and ‘LangChain Vs LlamaIndex’.

| Feature | LangChain | LlamaIndex |
|---|---|---|
| Primary Focus | Building complex workflows and chains | Efficient data indexing and retrieval |
| Flexibility | Highly flexible with multi-component chains | Specialized in data integration for LLMs |
| Indexing Strategies | Limited indexing capabilities | Multiple indexing options (Tree, Vector, etc.) |
| Use Cases | Advanced task automation, multi-step processes | Document search, custom chatbots, data extraction |
| Community and Support | Larger community with more contributors | Growing community with focused support |
| Cost Efficiency | More cost-effective for complex workflows | Cost-effective for data retrieval tasks |

LlamaIndex is versatile and can be used for different tasks. Now, let’s look at some LlamaIndex use cases to get an even deeper idea of what LlamaIndex is.

LlamaIndex Use Cases

The use cases below are just examples to give you an idea. LlamaIndex is not limited to these; what you build with it depends on your creativity and its marvellous capabilities.

| Use Case | Description |
|---|---|
| Question-Answering Systems | Building retrieval-augmented generation (RAG) QA systems where the LLM can access new information that it was never trained on to provide accurate and context-specific answers. |
| Document Understanding and Data Extraction | Parsing large volumes of documents to extract key information, which enables automated summarization, categorization, and insight generation. |
| Custom Chatbots | Building chatbots that can access private data, like customer information, product manuals, or internal reports, in real time. This allows them to give personalized and accurate answers to user questions. |
| Autonomous Agents | Developing AI-driven agents capable of making decisions and performing tasks based on specific data, enhancing automation and efficiency. |
| Business Intelligence | Facilitating natural language querying over business data, allowing non-technical users to generate reports and gain insights without complex query languages. |
| Data-Driven Assistants | Building assistants that can interact with both structured and unstructured data, providing comprehensive support for data analysis and decision-making. |

First Code of LlamaIndex

To get started, we will run a simple piece of code using Fireworks’ LLM and LlamaIndex to get a more hands-on insight into ‘What is LlamaIndex’.

Start by installing the LlamaIndex integration for Fireworks’ LLM (the %pip prefix is for notebooks; in a terminal, use plain pip) and importing it:

%pip install llama-index-llms-fireworks

from llama_index.llms.fireworks import Fireworks

Now we need to set up the environment for Fireworks, so we have to provide a Fireworks API key, which you can get from Fireworks.

import getpass

import os

# Ask for the key only if it is not already set in the environment.

if "FIREWORKS_API_KEY" not in os.environ:

    os.environ["FIREWORKS_API_KEY"] = getpass.getpass("Fireworks API Key:")

Now it’s time to run the code to end our blog on ‘What is LlamaIndex’:

resp = Fireworks().complete("Paul Graham is ")

print(resp)

Conclusion

In this article, ‘What is LlamaIndex’, you learned what LlamaIndex is by exploring its various aspects.

First, you saw the definition of LlamaIndex to get a basic idea of it. Then you deepened that understanding by exploring how it works, how it compares with LangChain, and its use cases. At the end, you ran your first code.

Thank you for reading my blog on ‘What is LlamaIndex’. I hope you now know the answer. I will keep uploading more tutorials on LlamaIndex, so stay in touch. You can also check out my LangChain series, and feel free to reach out with suggestions or feedback.

Thanks once again.