This LangChain Quick Start blog is your first and most essential step in learning LangChain. In this guide, we will learn how to use different LLMs through LangChain: first the well-known, paid models from OpenAI, and then an LLM by Mistral that you can use for free.

So, without any delay, let's start coding!

Installations For LangChain QuickStart

Before we begin coding, we need to install a few packages and dependencies: LangChain itself, which connects the LLM to other components such as user queries and prompts, and the integration packages for the LLMs we are going to use.

```
%pip install langchain
%pip install langchain-openai
%pip install -qU langchain-fireworks
```

After installing both the framework that connects everything (LangChain) and the integration packages for our LLMs, we need to set up each LLM's environment with an API key. Let's start with OpenAI first.
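Before moving on, you can optionally confirm that the installs worked. The sketch below uses Python's standard `importlib.metadata` to report which of the three packages are present; the helper name `installed_versions` is hypothetical and not part of LangChain.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_versions(packages):
    """Map each package name to its installed version, or None if it is missing."""
    result = {}
    for name in packages:
        try:
            result[name] = version(name)
        except PackageNotFoundError:
            result[name] = None  # not installed in this environment
    return result


if __name__ == "__main__":
    packages = ["langchain", "langchain-openai", "langchain-fireworks"]
    for pkg, ver in installed_versions(packages).items():
        print(f"{pkg}: {ver or 'NOT INSTALLED'}")
```

If any package shows up as NOT INSTALLED, rerun the corresponding `%pip install` line and restart the notebook kernel.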

LangChain QuickStart with OpenAI

Get your OpenAI API key from here, and then provide it to LangChain using one of several methods; two of them are shown below.

Use getpass if you want to provide the API key at runtime:

```python
from getpass import getpass
import os

if "OPENAI_API_KEY" not in os.environ:
    os.environ["OPENAI_API_KEY"] = getpass("OpenAI API Key:")
```
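The same "prompt only if the variable is missing" pattern is needed again later for Fireworks, so it can be wrapped in a small helper. This is a sketch: `ensure_env_var` is a hypothetical name, and the `prompt` argument exists only so the function can be tested without typing a real key.

```python
import os
from getpass import getpass


def ensure_env_var(name, prompt=getpass):
    """Set os.environ[name] by prompting, unless it is already set; return the value."""
    if name not in os.environ:
        # getpass hides what you type, so the key is not echoed to the screen
        os.environ[name] = prompt(f"{name}: ")
    return os.environ[name]


# Usage (each call prompts only if the variable is not already set):
# ensure_env_var("OPENAI_API_KEY")
# ensure_env_var("FIREWORKS_API_KEY")
```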

Let's initialise the model and invoke it, without passing any parameters for now.

```python
from langchain_openai import OpenAI

llm = OpenAI()
```

We call the LLM through its invoke method, as shown below, passing our query as a string in quotes.

```python
llm.invoke("Who was George Washington?")
```

Let's look at another way of setting up the OpenAI environment. Remember that each method has its place: no method is better than another, and the right choice depends on your usage and requirements.

If we want to set up the OpenAI environment so that we don't have to type the API key at runtime, we can use the method below.

```python
import os

# Replace "..." with your real OpenAI API key
os.environ["OPENAI_API_KEY"] = "..."
```

Now we initialise and run the model the same way as before, using the code below.

```python
llm = OpenAI()
llm.invoke("Who was George Washington?")
```

OpenAI() is the standard wrapper for OpenAI's older, completion-style models, and we can pass it different models and parameters. If you want to use an OpenAI model of your own choice, pass its name inside the parentheses, for example llm = OpenAI(model_name="text-davinci-003"). You can replace text-davinci-003 with another of OpenAI's completion models; note that text-davinci-003 itself has since been retired by OpenAI, so a current completion model such as gpt-3.5-turbo-instruct is the safer choice.

However, this class is not suitable for the newer chat-based models. For those, you have to use the ChatOpenAI class, so let's look at that one too.

When initialising any model, we can also pass various other parameters, as shown below; read the comments to see what each one does.

```python
from langchain_openai import ChatOpenAI

chat = ChatOpenAI(
    model="gpt-4o",  # Specifies the model to use, in this case "gpt-4o".
    temperature=0,  # Controls randomness; 0 makes responses as deterministic as possible.
    max_tokens=None,  # No explicit limit; the model's own maximum output length applies.
    timeout=None,  # No timeout; waits as long as needed for a response.
    max_retries=2,  # Retries up to 2 times if a request fails.
    api_key="...",  # Pass the API key directly here if you are not using environment variables.
)
```

Among the above parameters, you may have noticed that we can also pass our API key directly. If we do it here, we don't need to set the environment variable as we did before.

Please don't forget to replace the three dots with your real API key. If you have already set the key as an environment variable, either skip this parameter or remove it from here.

Every parameter is optional, including the model: if we don't provide one, ChatOpenAI falls back to its default model.

More parameters are available, but I have explained only these six.
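To get a feel for what `temperature` does, here is a simplified illustration of temperature-scaled softmax, a common way to turn a model's raw scores into sampling probabilities. It is not OpenAI's actual implementation, and the scores are made-up numbers; the point is that dividing by a smaller temperature concentrates probability on the top choice, which is why temperature=0 behaves (nearly) like always picking the most likely token.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Convert raw scores to probabilities; temperature=0 is treated as greedy (argmax)."""
    if temperature == 0:
        # Limit case: all probability goes to the highest score
        best = max(range(len(logits)), key=lambda i: logits[i])
        return [1.0 if i == best else 0.0 for i in range(len(logits))]
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


scores = [2.0, 1.0, 0.5]  # hypothetical scores for three candidate tokens
for t in (1.5, 1.0, 0.5, 0):
    probs = softmax_with_temperature(scores, t)
    print(t, [round(p, 3) for p in probs])
```

Running it shows the first token's probability growing as the temperature drops, until it takes all of the probability at 0.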

We can invoke this chat class directly, like the previous one, or we can pass it a list of messages, such as a system instruction followed by the user's question, to guide its output.

```python
chat.invoke("tell me an interesting fact about Mars")
```

Here is the same request, this time passing a list of messages to direct the output.

```python
messages = [
    (
        "system",
        "You are a helpful assistant that teaches an interesting fact daily",
    ),
    ("human", "tell fact about Mars"),
]

chat.invoke(messages)
```

But OpenAI's API is paid. What if we want to try some good models for free? That's where Fireworks comes to the rescue, so let's look at the LangChain QuickStart with Fireworks.

LangChain QuickStart with Fireworks

To use Fireworks, we need to install and import it just like OpenAI and then set up its environment. We have already installed the Fireworks package, so now we just need to import it and set up the environment. The setup works the same way as for OpenAI, so you can use whichever method you prefer.

First, get your API key from here.

```python
import getpass
import os

if "FIREWORKS_API_KEY" not in os.environ:
    os.environ["FIREWORKS_API_KEY"] = getpass.getpass("Fireworks API Key:")
```

Now let's create an instance of the Fireworks LLM (a non-chat model) using Mistral's Mixtral instruct model. An instruct model is a type of large language model (LLM) trained specifically to follow instructions. Whereas base LLMs are trained only to predict the next word in a sequence, instruct models are additionally fine-tuned on instruction-response pairs.
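To make "instruction-response pairs" concrete, the sketch below shows what such fine-tuning data might look like and how an instruction could be wrapped into a prompt string. The pairs are invented examples, and the `[INST] ... [/INST]` markers follow the convention Mistral describes for its instruct models; treat the exact template as an assumption, since this is only an illustration of the idea, not what Fireworks or LangChain send on your behalf.

```python
# Hypothetical instruction-response pairs of the kind instruct models are fine-tuned on
training_pairs = [
    {"instruction": "Tell me an interesting fact about Mars.",
     "response": "Mars looks red because its surface is rich in iron oxide."},
    {"instruction": "Summarise: The cat sat on the mat.",
     "response": "A cat sat on a mat."},
]


def format_instruct_prompt(instruction):
    """Wrap an instruction in Mistral-style [INST] markers (template is an assumption)."""
    return f"[INST] {instruction} [/INST]"


def format_training_example(pair):
    """Join the wrapped instruction and its target response into one training string."""
    return format_instruct_prompt(pair["instruction"]) + " " + pair["response"]


for pair in training_pairs:
    print(format_training_example(pair))
```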

```python
from langchain_fireworks import Fireworks

llm = Fireworks(
    model="accounts/fireworks/models/mixtral-8x7b-instruct",
    base_url="https://api.fireworks.ai/inference/v1/completions",
)
```

To invoke it, we use the same method as before. We have changed the model and the class, but we are still using the same LangChain framework, which is why the invocation method is the same for every model or wrapper.

```python
llm.invoke("tell some interesting fact about mars")
```

Now let's try the same model with the ChatFireworks class, which is designed specifically for chat applications; in our tests, this Fireworks model performed better with the ChatFireworks class.

```python
from langchain_fireworks import ChatFireworks

model = ChatFireworks(model="accounts/fireworks/models/mixtral-8x7b-instruct")

# Now invoke it just like before
model.invoke("Who was George Washington?")
```
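The same invoke call works across OpenAI, ChatOpenAI, Fireworks and ChatFireworks because LangChain gives its models a shared interface. The sketch below mimics that idea in plain Python with two made-up stand-in classes (`FakeCompletionModel`, `FakeChatModel`); they are hypothetical, call no API, and return plain strings (real chat models return a message object instead). They only show how code written against `invoke` keeps working whichever class you pass in.

```python
from typing import Protocol


class SupportsInvoke(Protocol):
    def invoke(self, prompt: str) -> str: ...


class FakeCompletionModel:
    """Hypothetical stand-in for a completion-style wrapper such as OpenAI or Fireworks."""

    def invoke(self, prompt: str) -> str:
        return f"[completion] {prompt}"


class FakeChatModel:
    """Hypothetical stand-in for a chat wrapper such as ChatOpenAI or ChatFireworks."""

    def invoke(self, prompt: str) -> str:
        return f"[chat] {prompt}"


def ask(model: SupportsInvoke, question: str) -> str:
    # Works with any object that has an invoke method, whatever its class
    return model.invoke(question)


for m in (FakeCompletionModel(), FakeChatModel()):
    print(ask(m, "Who was George Washington?"))
```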

Because ChatFireworks is also a chat wrapper, it accepts the same structured list of messages as ChatOpenAI, and it is invoked the same way.
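Both chat wrappers accept the same list of (role, content) tuples. As a rough illustration of what that list stands for, the sketch below converts it into the role/content dictionaries used by OpenAI-style chat APIs, where LangChain's "human" role corresponds to "user" and "ai" to "assistant". The helper `to_chat_dicts` is hypothetical; LangChain performs this kind of conversion internally, so you don't need it in practice.

```python
# Map LangChain's tuple role names to OpenAI-style chat roles
ROLE_MAP = {
    "system": "system",
    "human": "user",
    "user": "user",
    "ai": "assistant",
    "assistant": "assistant",
}


def to_chat_dicts(messages):
    """Convert (role, content) tuples into [{"role": ..., "content": ...}] dictionaries."""
    converted = []
    for role, content in messages:
        if role not in ROLE_MAP:
            raise ValueError(f"Unknown role: {role!r}")
        converted.append({"role": ROLE_MAP[role], "content": content})
    return converted


messages = [
    ("system", "You are a helpful assistant that teaches an interesting fact daily"),
    ("human", "tell fact about Mars"),
]
print(to_chat_dicts(messages))
```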

messages = [

(

"system",

"You are a helpful assistant that teaches an interesting fact daily",

),

("human", "tell fact about Mars"),

]

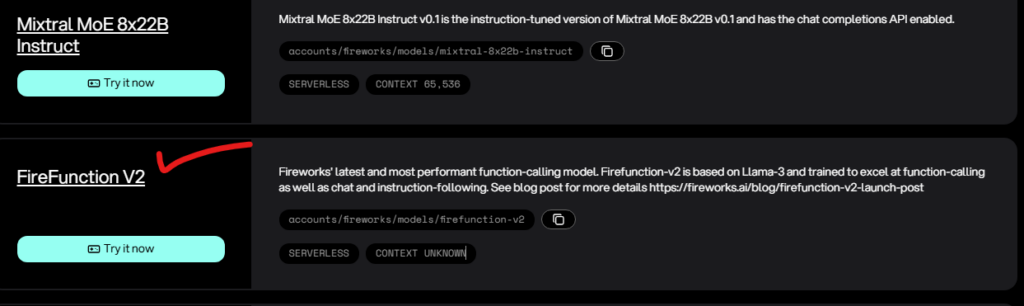

model.invoke(messages)You can explore other models in Fireworks as well. Simply search for them and replace the model with one of your choice. Here’s the link to find different models supported by Fireworks.

As you can see in the image above, I simply copied the model's address from the link provided earlier and replaced the Mixtral model's address with the new model's address.
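Since the Fireworks model addresses in this guide follow the pattern `accounts/fireworks/models/<model-name>`, switching models only means changing the last segment. A tiny helper can build the address for you; `fireworks_model_id` is a hypothetical name, and the pattern is inferred from the model addresses used in this post rather than from an official specification.

```python
FIREWORKS_PREFIX = "accounts/fireworks/models/"  # pattern seen in the model addresses in this post


def fireworks_model_id(name):
    """Return the full Fireworks model address for a short model name."""
    name = name.strip()
    if not name:
        raise ValueError("model name must not be empty")
    # Accept either a short name or an already-complete address
    return name if name.startswith("accounts/") else FIREWORKS_PREFIX + name


print(fireworks_model_id("mixtral-8x7b-instruct"))
print(fireworks_model_id("firefunction-v2"))
# Usage with LangChain (requires FIREWORKS_API_KEY to be set):
# model = ChatFireworks(model=fireworks_model_id("firefunction-v2"))
```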

```python
from langchain_fireworks import ChatFireworks

model = ChatFireworks(model="accounts/fireworks/models/firefunction-v2")
```

The structure of the messages and the way we invoke the model stay the same as before, as shown in the code below.

```python
messages = [
    (
        "system",
        "You are a helpful assistant that teaches an interesting fact daily",
    ),
    ("human", "tell fact about Mars"),
]

model.invoke(messages)
```

In Conclusion: LangChain Quickstart

In this LangChain Quickstart, we’ve walked through the essential steps to get up and running with different LLMs, including both OpenAI and Fireworks models. Whether you opt for the paid OpenAI option or the free Mistral option, LangChain makes the process straightforward and accessible.

By following this LangChain Quickstart guide, you've learned how to set up environments, invoke models, and experiment with various parameters to get the most out of each LLM.

So, keep this LangChain Quickstart handy as a foundation. The queries shown here can be improved with prompt templates and chains, which are the most essential ingredients of LangChain. To learn more, the next blogs to read are LangChain Prompt Templates and LangChain LCEL.

For any suggestions or queries, feel free to reach out.